It might be a cliché to say, but the Internet really has been taken by storm by the new image generation capabilities of ChatGPT’s 4o model. Everywhere you look, friends, acquaintances, and friends-of-friends are sharing AI-edited photos that mimic the dreamy, painterly aesthetic of Studio Ghibli films. The Japanese animation studio, co-founded by Hayao Miyazaki, is known for its rich fantasy worlds and strong anti-war themes. The trend is so widespread that “Ghiblify” and “Ghiblifying” are now used as verbs, and “Ghibli-fication” as a noun, to describe the phenomenon.

It was perhaps inevitable that this sweeping trend would generate significant controversy and divide the public into two distinct camps. The first is a heterogeneous group of AI enthusiasts, opportunists/grifters, and fence-sitters: those who have been tracking the development of Generative AI, weighing its potential benefits and harms, and waiting for the right moment to engage.

The second group consists of people who abhor AI art or anything related to Generative AI. To them, it is a soulless imitation, mere slop; it holds no value, and they dismiss the emotional sincerity of users uploading altered images of loved ones, even when those users are motivated by nostalgia or an appreciation for shared cultural references. To them, it is unethical in every way.

They believe this particular technology has no net benefit, comes at a significant environmental cost, will kill creativity, and is built upon uncompensated labour and intellectual property violations. As a result, many within this camp are actively calling for more robust copyright protections and stricter regulation of AI technologies.

This second camp, however, may be ensnared in a rhetorical and ideological paradox. Their stance is shaped not only by a kind of selective historical amnesia but also by dominant narratives circulating within American techno-cultural discourse. Their reactionary posture, frequently accompanied by a strong sense of moral certainty, often becomes a performative act, incentivised by online cultures that reward visible dissent and contrarianism.

And we are all guilty of it, intentionally or unintentionally. Social media platforms reward this behaviour.

Consider, for instance, that many within this group likely regard Aaron Swartz, the co-creator of the web feed format RSS and an open-access advocate, as a figure of moral clarity. Swartz was arrested in 2011 for downloading a large number of academic journal articles from JSTOR with the goal of making them publicly available. His death by suicide is widely seen as the result of overzealous prosecution, and it sparked a movement advocating the free flow of information. I share that view, i.e., knowledge should be free, open, and not monopolised by paywalls or elite institutions.

Yet there is a tension when this same group demands stricter copyright laws solely in response to the proliferation of AI-generated art. Such calls, while perhaps well-intentioned, risk strengthening the very systems they seek to dismantle.

The case of Nintendo

Historically, expansive copyright regimes have rarely served the interests of smaller creators; rather, they tend to consolidate power in the hands of large media conglomerates. In attempting to curb the proliferation of generative AI, this group may inadvertently reinforce the legal and economic structures they otherwise critique. In essence, moral panic is leading them into a trap.

Take, for example, the case of Nintendo. In 2018, the company filed a lawsuit against the popular ROM-hosting websites LoveROMS.com and LoveRETRO.co, accusing them of “brazen and mass-scale infringement” of its intellectual property rights. It is worth noting that this included ROMs of games that were no longer commercially available. The case was ultimately settled out of court, with the owners agreeing to pay $12 million in damages (a symbolic figure) and both websites permanently taken offline and replaced with legal takedown notices. The lawsuit, as intended, sent a chilling message across ROM preservation and emulation communities, reinforcing the idea that even archival or non-commercial distribution of older games would not be tolerated.

Moreover, until very recently, Apple prohibited emulator apps on the App Store, a policy widely believed to stem from concerns over potential copyright violations and lawsuits by Nintendo. It was only after the European Union’s Digital Markets Act (DMA) came into effect, requiring Apple to allow third-party app stores on iOS devices, that Apple began to relax its restrictions. Within these alternative app stores, emulators quickly gained traction, which prompted Apple to revise its policies to stay competitive.

In September 2022, the European Commission announced that the world’s largest digital companies, designated as “gatekeepers” by the EU, would be required to follow a set of strict rules under the landmark Digital Markets Act (DMA). On March 25, 2024, the EU launched its first-ever investigations under the DMA, targeting Apple, Google’s parent company Alphabet, and Meta.

Image Credit: Kenzo Tribouillard/AFP

Calls for stronger copyright enforcement, especially as a response to generative AI or digital reproduction, risk entrenching a legal and corporate environment in which only the largest rights-holders benefit. Rather than protecting individual creators, such precedents often end up curbing preservation efforts, stifling creativity, and reinforcing monopolistic control over cultural memory. To put it bluntly, it is a counterproductive exercise.

The environmental impact of generative AI is another recurring concern among critics, especially as large-scale models have substantial energy demands. However, to evaluate such concerns, one must place them in the broader context of the history of computing and contemporary developments in the field of AI.

In 2024, Geoffrey Hinton and John J. Hopfield won the Nobel Prize in Physics “for foundational discoveries and inventions that enable machine learning with artificial neural networks”. Hinton, in particular, has long been called the “Godfather of AI” for his pivotal role in reviving neural networks as a viable framework for machine learning.

However, neural networks, which paved the way for today’s cutting-edge AI systems, were not always held in such high regard. From the late 1950s through the early 1990s, the dominant approach in AI research was Symbolic AI, which focused on rule-based, logic-driven systems such as expert systems. These “hand-coded” approaches were more interpretable and better suited to the computational limits of the time. Neural networks, by contrast, were widely regarded as inefficient and speculative.

They suffered from theoretical bottlenecks such as the XOR problem, famously identified by Marvin Minsky and Seymour Papert in their 1969 book Perceptrons. They argued that the neural network models of the time, particularly single-layer perceptrons, were fundamentally limited: a single-layer perceptron can only separate its inputs with one straight line, and no such line separates the true and false cases of exclusive-or (XOR). More broadly, Minsky and his contemporaries contended that such models, while effective for simple tasks, lacked the capacity to scale to more complex cognitive functions. There were also infrastructural problems, such as a lack of computing power. These challenges arguably led to declining interest and funding in neural networks, contributing to what is now known as the first AI winter.
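To make the XOR limitation concrete, here is a minimal Python sketch. It is an illustration added for this piece, not anything from Minsky and Papert, and every function name in it is hypothetical. It trains a single-layer perceptron with the classic perceptron learning rule on AND, which one straight line can separate, and on XOR, which no straight line can; it then shows a hand-wired two-layer network (OR and NAND units feeding an AND unit) that computes XOR exactly.

```python
# Illustrative sketch of the XOR problem; all names are hypothetical,
# not taken from any library.

def step(z):
    """Threshold activation: output 1 only if the weighted sum is positive."""
    return 1 if z > 0 else 0


def train_perceptron(samples, epochs=100, lr=1.0):
    """Single-layer perceptron trained with the classic perceptron learning rule."""
    w1, w2, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), target in samples:
            error = target - step(w1 * x1 + w2 * x2 + b)
            if error != 0:
                # Nudge the weights and bias toward the correct answer.
                w1 += lr * error * x1
                w2 += lr * error * x2
                b += lr * error
                mistakes += 1
        if mistakes == 0:  # every sample classified correctly: converged
            break
    return w1, w2, b


def accuracy(params, samples):
    """Fraction of samples the linear threshold unit classifies correctly."""
    w1, w2, b = params
    hits = sum(step(w1 * x1 + w2 * x2 + b) == t for (x1, x2), t in samples)
    return hits / len(samples)


def two_layer_xor(x1, x2):
    """Hand-wired hidden layer: XOR(x1, x2) = AND(OR(x1, x2), NAND(x1, x2))."""
    h_or = step(x1 + x2 - 0.5)
    h_nand = step(-x1 - x2 + 1.5)
    return step(h_or + h_nand - 1.5)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

if __name__ == "__main__":
    print("perceptron on AND:", accuracy(train_perceptron(AND), AND))
    print("perceptron on XOR:", accuracy(train_perceptron(XOR), XOR))
    print("two-layer XOR:", [two_layer_xor(x1, x2) for (x1, x2), _ in XOR])
```

The trained perceptron classifies all four AND cases correctly, but no choice of weights can get all four XOR cases right, which is the geometric core of the objection. Adding a hidden layer removes the limitation, though here its weights are set by hand; learning such weights automatically is what backpropagation later made practical.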

Political backing

The broader takeaway is not merely about technical progress or stagnation, but about how quickly capital, state interest, and institutional focus can coalesce around a single paradigm, and how abruptly that support can vanish when expectations are not met. Today, with the dramatic ascent of Large Language Models (LLMs) and generative image tools, we are witnessing a similar influx of global capital and political backing. The prevailing narrative casts this as a necessary path toward Artificial General Intelligence (AGI) or even Superintelligence (there is no agreed-upon definition of either term).

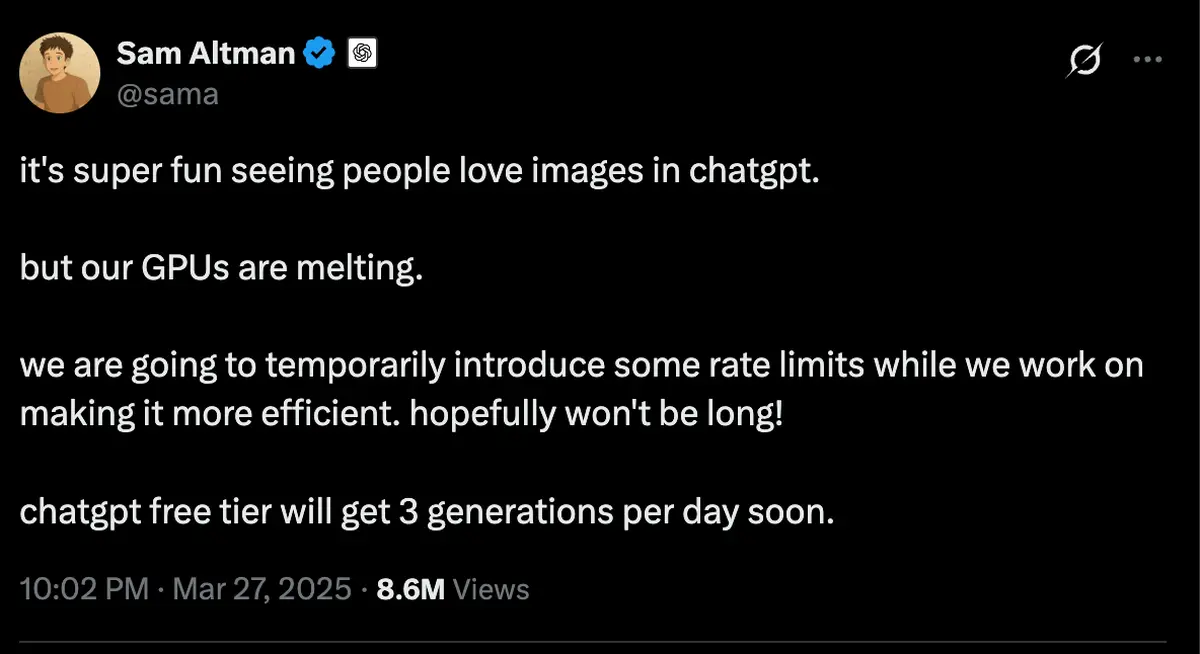

Against this backdrop, addressing the environmental and financial costs of generative AI is not only an ethical necessity but an economic imperative. The responsibility for improving efficiency lies not just with frontier AI labs such as OpenAI but extends across the broader ecosystem, including GPU manufacturers, cloud infrastructure providers, and semiconductor companies. Signs of infrastructural strain are already visible: several posts by Sam Altman on X, along with the imposition of rate limits on OpenAI’s image generation models, suggest that current demand is exceeding the operational capacity of available hardware.

If these systems continue to exhibit high energy demands and prohibitive operational costs, the implications for the industry will be significant. Investment capital, by its nature, seeks efficiency and returns. Should frontier AI companies fail to reduce costs or demonstrate scalable paths to monetisation, they risk losing investor confidence. As earlier technological cycles have shown, such conditions can quickly dry up funding and erode institutional faith.

A post on X by Sam Altman, CEO of OpenAI, announcing temporary rate limits on image generation in ChatGPT due to high demand and GPU strain.

Image Credit: Sam Altman on X

Another historical precedent worth examining is the IBM 350 RAMAC, introduced in 1957 as the first commercial hard disk drive, which consumed roughly 500 watts of power while offering a storage capacity of a mere 3.75 megabytes. Although revolutionary for its time, it was emblematic of the inefficiencies that characterise early-stage technologies. Over the following decades, advances in engineering and the pressure of market competition led to dramatic improvements in storage density, energy efficiency, and cost-effectiveness.

Again, this historical trajectory offers a useful parallel for understanding the future of generative AI. While current models may be energy-intensive and expensive to operate, such conditions are rarely permanent. If the history of computing is any indication, the viability of general-purpose AI will ultimately depend on its ability to adapt to material constraints and economic pressures. In this sense, market forces are likely to play the decisive role in shaping the long-term fate of the field.

This is not to suggest that generative AI should be exempt from critique merely because of its potential. On the contrary, critical engagement is essential, particularly given that the story of this technology is not only one of computational efficiency but also one of labour, displacement, and inequality. However, such criticism must be grounded in the political, economic, and institutional realities of the present moment.

When countries such as France and India co-chair global gatherings like the AI Action Summit, and when the Vice President of the United States publicly asserts that American AI must remain the global “gold standard”, calling simultaneously for innovation and regulatory leniency, it becomes clear that the Overton window has shifted. The discourse has moved decisively in favour of rapid adoption, and so has the capital. The vast majority of global investment, public and private, is now flowing into generative AI and adjacent fields.

Grey areas between moral extremes

In this context, appeals to moral absolutism risk losing political efficacy if not accompanied by practical engagement. Policy cannot rely solely on virtue signalling: while such positioning may carry symbolic weight, it often lacks durability in the face of complex technological and economic realities. Moreover, human behaviour rarely operates in absolutes. It is conventional wisdom that people tend to function in the grey areas between moral extremes. The more pressing question is no longer whether generative AI should exist but how it can be responsibly shaped, governed, and integrated into public life.

How might generative AI be thoughtfully incorporated into education systems? What safeguards are needed for young learners who may come to rely on AI despite having weak foundational skills? Anecdotal signs of this shift are already visible: Gen Z reportedly refers to the em dash as the “ChatGPT hyphen”, suggesting that the erosion of basic vocabulary and writing conventions is already underway. And perhaps most urgently, how can societies anticipate and mitigate the labour disruptions these technologies are likely to intensify?

Whether one embraces or resists these developments, it is clear that generative AI has entered a phase of mass adoption, and the recent “Ghibli-fication” trend is a case in point. The imperative now is to proceed with strategic foresight and institutional care. Market forces have already accelerated this transition; the real challenge is to ensure that public policy, social dynamics, and ethical inquiry evolve in tandem.

Kalim Ahmed is a writer and open-source researcher who focuses on tech accountability, disinformation, and foreign information manipulation and interference (FIMI).